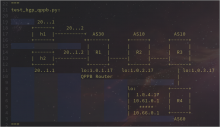

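We should consider implementing QPPB (QoS Policy Propagation via BGP) in VyOS. This feature is very useful for centralized, dynamic QoS configuration: QoS classes are signalled through BGP route attributes, so they do not have to be configured by hand on every router.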
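To illustrate the idea, here is a minimal conceptual sketch in Python. It is not a proposed VyOS design. It assumes the QoS class is derived from a BGP community; the community values, class names, and the helpers `build_qos_table` and `classify` are hypothetical and exist only for this example.

```python
import ipaddress

# Hypothetical policy: BGP community -> QoS class (values are illustrative only).
COMMUNITY_TO_CLASS = {"65000:100": "gold", "65000:200": "silver"}


def build_qos_table(routes):
    """Map each received prefix to a QoS class based on its communities."""
    table = []
    for prefix, communities in routes:
        for community in communities:
            qos_class = COMMUNITY_TO_CLASS.get(community)
            if qos_class is not None:
                table.append((ipaddress.ip_network(prefix), qos_class))
                break  # first matching community wins
    # Sort longest prefix first so lookups prefer the most specific route.
    table.sort(key=lambda entry: entry[0].prefixlen, reverse=True)
    return table


def classify(table, address, default="best-effort"):
    """Return the QoS class for an address, or the default if nothing matches."""
    ip = ipaddress.ip_address(address)
    for network, qos_class in table:
        if ip in network:
            return qos_class
    return default


# Routes as (prefix, communities) pairs, as they might be learned via BGP.
routes = [
    ("203.0.113.0/24", ["65000:100"]),
    ("198.51.100.0/24", ["65000:200"]),
    ("192.0.2.0/24", []),
]
table = build_qos_table(routes)
print(classify(table, "203.0.113.7"))
```

In a real implementation the class would be attached to the route when it is installed in the forwarding table, and packets would be classified by a source or destination lookup; the vendor documents linked below describe how each platform does this.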

References to existing implementations from other vendors:

https://www.cisco.com/c/en/us/td/docs/ios-xml/ios/iproute_pi/configuration/xe-16-10/iri-xe-16-10-book/iri-qos-policy-prop-via-bgp.pdf

https://infocenter.nokia.com/public/7750SR217R1A/index.jsp?topic=/com.nokia.Layer_3_Services_Guide_21.7.R1/qos_policy_prop-ai9enrmmb9.html

https://support.huawei.com/enterprise/en/doc/EDOC1100055105/f5d5d02a/example-for-configuring-qppb-bgp