hi,

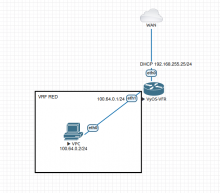

I started using VRF and stumbled over a nasty NAT bug:

Device:

eth0 192.168.0.100/24 gw 192.168.0.1 VRF OOBM

eth1 192.168.0.1/24 VRF default

eth2 no IP VRF default

pppoe0 dynamic public IP from ISP VRF default

eth0 and eth1 are connected to the same switch and can ping each other
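For reference, the VRF part of the layout above would look roughly like the following. This is only a sketch: the table ID 100 is an assumption (it is not stated anywhere above), and the gateway route inside the VRF is left out because its syntax differs between VyOS versions.

```
# Assumption: table ID 100 is a placeholder, not taken from the real config
set vrf name OOBM table 100
# eth0 lives in the OOBM VRF
set interfaces ethernet eth0 vrf OOBM
set interfaces ethernet eth0 address 192.168.0.100/24
# eth1 stays in the default VRF
set interfaces ethernet eth1 address 192.168.0.1/24
```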

NAT RULE:

set nat source rule 100 outbound-interface 'pppoe0'

set nat source rule 100 protocol 'all'

set nat source rule 100 translation address 'masquerade'

NAT works for all other devices in 192.168.0.0/24, but all packets from 192.168.0.100 go out of pppoe0 without being masqueraded.